2060 SUPER or 2070 SUPER?

LenioTG

Posts: 2,118

Hi everyone :D

Since there are many experts on this topic here, I'd like to ask for your advice about a GPU upgrade.

My current configuration is

- CPU: Ryzen 5 3600

- RAM: 32 GB (4x8) at 3000 MHz (I actually use all of it, but since I have no empty slots, I don't want to spend 200+ euros to go to 64 GB).

- PSU: Corsair CX450M

- GPU: RTX 2060

Since I often travel between two cities, and I have two PCs, but one of them has no GPU, I'm going to purchase a new one.

I like the RTX 2060, but since these new models with 8 GB of VRAM came out (and I sometimes run out of VRAM with 6 GB), I was thinking about the RTX 2060 SUPER or the 2070 SUPER (in Italy the 2070 is not worth it).

About the RTX 2060 SUPER in Italy

- It costs 445,30€

- Videocardbenchmark: 13806

- Octanebench: 203

- RT Cores: 34

- CUDA Cores: 2176

About the RTX 2070 SUPER

- It costs 585,99€ (+32%) (but I would need 99 more euros to change the PSU, Corsair RM750, if I got it, so it would cost 684,98€)

- Videocardbenchmark: 14849 (+8%)

- Octanebench: 220 (+8%)

- RT Cores: 40 (+18%)

- CUDA Cores: 2560 (+18%)
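For anyone who wants to re-check the percentages in the two lists, here is a minimal Python sketch. All figures are copied from the post; the "euros per OctaneBench point" metric is just one assumed way to express value for money, not something the benchmark sites publish.

```python
# Figures copied from the post above; prices in euros.
cards = {
    "RTX 2060 SUPER": {"price": 445.30, "passmark": 13806, "octane": 203,
                       "rt_cores": 34, "cuda_cores": 2176},
    "RTX 2070 SUPER": {"price": 585.99, "passmark": 14849, "octane": 220,
                       "rt_cores": 40, "cuda_cores": 2560},
}


def pct_increase(old, new):
    """Return the relative increase from old to new, in percent."""
    return (new - old) / old * 100


base = cards["RTX 2060 SUPER"]
upgrade = cards["RTX 2070 SUPER"]

# Percentage difference of the 2070 SUPER over the 2060 SUPER, per field.
deltas = {key: pct_increase(base[key], upgrade[key]) for key in base}
for key, value in deltas.items():
    print(f"{key:>10}: {value:+.0f}%")

# Assumed value metric: euros paid per OctaneBench point (lower is better).
euros_per_point = {name: c["price"] / c["octane"] for name, c in cards.items()}
for name, value in euros_per_point.items():
    print(f"{name}: {value:.2f} EUR per OctaneBench point")
```

The PSU cost is left out here; adding it to the 2070 SUPER price would only widen the gap in euros per point.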

I also game a little bit, and my monitor is 60Hz 2160p.

I would like to not upgrade anything in the next 1,5 years at least.

What would you do in my stead?

Thank you in advance!

Comments

Bigger number is better. CUDA cores, I was told, matter a lot in DAZ Studio ... I don't even know what they are, but only you can decide if the extra $$$ is worth the jump up.

Wow, they are marking up those cards even more there. Based on the specs & the price difference I'd go for the 2060 Super.

If you are not in a hurry, another tech-savvy person in the forums (Outrider42) recommends waiting for the nVidia Ampere architecture GPUs, expected sometime in the 2020 model year. What you want is improvements in the realtime ray tracing capabilities of those GPUs to make extremely fast, almost realtime 4K iRay renders. From what I've read, the current models do HD resolution (1280x720) realtime ray traces in a second or two once the drivers are done and working for them (which they aren't yet, unfortunately).

I would buy a new GPU before Christmas!

I think it would be too soon anyway for the Ampere cards. Maybe they'll launch as a Quadro first or something like that.

Even if they came out in 2020, it would be late 2020, so early 2021 before the prices stabilized, and last time, when Daz implemented the RT cores, it took almost another year. So, I don't think we'll see those improvements in Daz before early 2022, and at that point I could buy a 3080 or something like that :)

Yep, Italy has one of the highest tax burdens in the whole world.

But the price is VAT included.

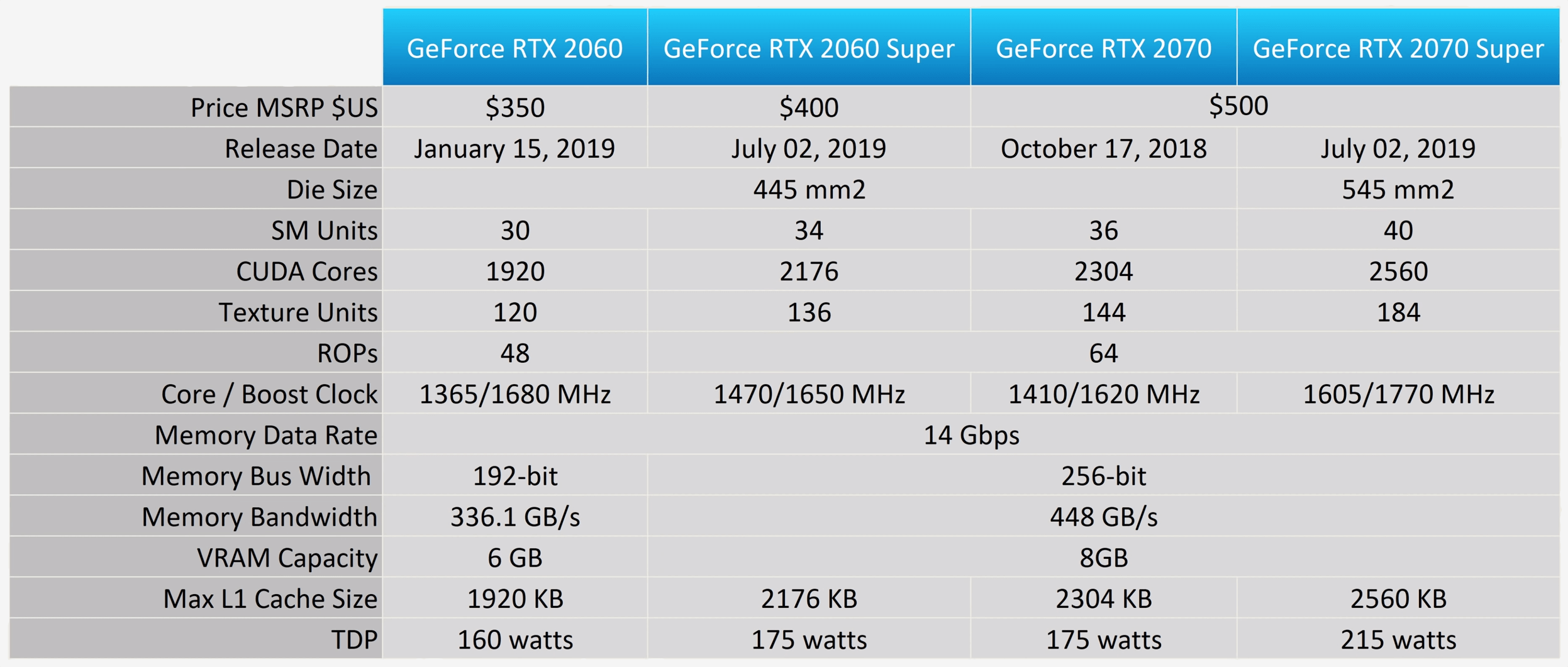

RTX 2060 SUPER: 445,30€ = 490,64$, +23% compared to those 400$ in the image.

RTX 2070 SUPER: 585,99€ = 645,65$, +29%.

And the GDP per capita here is 34321$, compared to 62869$ in the US (-45%).

If you factor that into the marked-up prices, these GPUs effectively cost +51% and +64% here, respectively xD

But this is also the Black Friday week, so these prices are a little bit higher than usual.
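The markup percentages in this post can be re-derived with a short Python sketch. The dollar-converted prices and GDP figures are the ones quoted above; the $500 US price for the 2070 SUPER is an assumption inferred from the quoted +29%, since the image is not reproduced here.

```python
# Dollar-converted Italian prices, as quoted in the post.
italy_usd = {"RTX 2060 SUPER": 490.64, "RTX 2070 SUPER": 645.65}

# US prices: $400 is quoted in the post; $500 is an ASSUMPTION
# inferred from the quoted +29% (the image itself is not available).
us_usd = {"RTX 2060 SUPER": 400.00, "RTX 2070 SUPER": 500.00}

# GDP per capita in dollars, as quoted in the post.
gdp_italy = 34321
gdp_us = 62869


def pct_diff(reference, value):
    """Return how far value is above (+) or below (-) reference, in percent."""
    return (value - reference) / reference * 100


# Markup of the Italian price over the US price for each card.
markup = {name: pct_diff(us_usd[name], italy_usd[name]) for name in italy_usd}
# Gap between Italian and US GDP per capita.
gdp_gap = pct_diff(gdp_us, gdp_italy)

for name, value in markup.items():
    print(f"{name}: {value:+.0f}% vs. US price")
print(f"GDP per capita, Italy vs. US: {gdp_gap:+.0f}%")
```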

Thanks, I'll add the CUDA cores in the first post.

The 2070 Super is obviously superior. It is not 32% faster, though, so if cost is a primary factor in your decision making then get the 2060 Super. I have a 2070, which the 2060 closely matches, and am very happy with it.

Well... I have those extra 140€, but I'm not sure it would be worth it!

I'm thinking about it :)

It would be awesome if Nvidia released a card with 100,000 or one million CUDA cores. However, I would not buy it; instead, I would buy an entry-level card with somewhere between 3,000 and 100,000 CUDA cores.

I guess I'm not a hobbyist anymore! But this is not my job either.

I often use ManFriday's render queue, but sometimes I do not.

I use Iray viewport quite a lot to test things out, but I don't know if that 10/20% performance increase would really make a difference.

I make VNs and use the render queue pretty much every day. If this is about making money, what you really have to figure out is whether render time has a major impact on your workflow. Going to a better card will let you get more renders done, which only matters if you have more renders to do.

Simple Facts:

More Cuda = More Speed

More RAM = Large Scene

At least here in Germany one would be well advised not to buy expensive computer hardware in the month of December... often the prices drop quite a bit after Christmas, because shops are trying to get rid of stock that they ordered for the Christmas sale and that hasn't sold...

That's a smart observation!

I'd like to know how much of a difference it would make in the first place. Spending 32% more for an 8% improvement is not worth it; for a 20% one it may be!

Indeed!

Well, I need it before mid-January.

I was waiting for the 20% off warehouse deals for Black Friday, but this year they didn't apply that discount to GPUs :(

The benchmarking thread could be a useful stop, as it specifically tracks performance in DS. The quoted 40% increase in performance is almost enough to make me suspect the results, but if it actually delivered that, it would be a very impressive boost in performance:

https://www.daz3d.com/forums/discussion/341041/daz-studio-iray-rendering-hardware-benchmarking/p1

I've checked it out, it's not been that active since August.

Where have you read about that 40% improvement?

I don't know which thread you've looked at, but the one I linked has had plenty of activity in the last month, and it has benchmark results for both of those cards, despite them being new. And if you trust those benchmarks, the 2060 Super benchmarks at about 4.4 iterations/s and the 2070 Super at 6.2 iterations/s, about a 40% increase.

That does seem like rather more of a leap than I was expecting, so I almost wonder if a mistake has been made somewhere, but those are the numbers given.
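As a rough way to weigh that figure against the price difference, here is a minimal Python sketch. The iteration rates are the ones quoted from the benchmark thread and the prices are from the first post; the 5000-iteration render is a made-up example, and real renders will not necessarily hold a constant rate.

```python
# Iteration rates quoted from the benchmark thread (iterations per second).
rate_2060s = 4.4
rate_2070s = 6.2

# Prices in euros, from the first post.
price_2060s = 445.30
price_2070s = 585.99

speedup = rate_2070s / rate_2060s        # how many times faster the 2070 SUPER is
price_ratio = price_2070s / price_2060s  # how many times more it costs


def render_minutes(iterations, rate):
    """Minutes needed to reach an iteration count at a constant rate."""
    return iterations / rate / 60


# Hypothetical render that stops at 5000 iterations (an assumed figure).
iterations = 5000
t_2060s = render_minutes(iterations, rate_2060s)
t_2070s = render_minutes(iterations, rate_2070s)

print(f"Speedup: {(speedup - 1) * 100:.0f}% for {(price_ratio - 1) * 100:.0f}% more money")
print(f"{iterations} iterations: {t_2060s:.1f} min vs. {t_2070s:.1f} min")
```

If the benchmark numbers hold, the speedup is larger than the price increase, which is the opposite of what the synthetic gaming benchmarks suggest.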

Nvidia likes to announce their new generations of GPU's at one of a series of events in the summer. I very much doubt they'll deviate. I would expect the announcement of the initial lineup in late 20 with cards becoming available by mid July. I strongly doubt Nvidia can move up the physical release. Ampere is on Samsung's 7nm node. Samsung is still getting their 7nm process ramped up. I'd assume they are producing engineering and qualification samples right now but they likely can't start mass production soon enough to materially affect the street availability.

Oh wow, I had seen the previous thread, now I see it's been abandoned for this one! That's the data I needed, thanks :D

It's a great thread, it's very organized!

Iterations per second:

This is way more than I expected!

And it would justify that expense.

Yes, I have to move my only GPU for hundreds of kilometers right now, I don't really want to wait for more than one year :)

I haven't taken that exact test, but I probably will!

I hadn't realized that the Beta uses a newer Iray plugin, and I'm currently using the stable version.

There is also this web site that has cumulative benchmarks for CPUs and GPUs. CUDA cores are important, and with more expensive cards you will get more of them, but with the RTX cards it is the realtime ray tracing that really speeds things up beyond CUDA. The drivers to make it all work in DAZ Studio aren't quite there yet but will be. No worries though, because just as the more expensive cards have more CUDA cores, they also have more SMs. SMs are probably much more complex than CUDA cores, we might guess, looking at how few of them there are compared to CUDA cores, but I guess ray tracing requires a lot of complexity.

SM means streaming multiprocessors which in the data I looked at is always equal to the number of ray tracing cores. It's like you're getting a miniature 30, 34, 36, or 40 core CPU that is specialized to do only ray tracing. I'm sure programmers could also leverage that capability to do other things as well.

https://www.passmark.com/

https://www.cpubenchmark.net/

https://www.videocardbenchmark.net/

UPDATE: I got the new PSU (Corsair RM750X) anyway, so I don't have to consider that price anymore.

It was on discount for the Cyber Monday and I couldn't resist xD

Thank you, the benchmarks I've posted in the first post are from videocardbenchmark.net :D

I didn't know about the audio stuff!

I have to say that, even if I game on a 4K monitor, for that regard my 2060 is already enough.

I usually play Europa Universalis IV and The Binding of Isaac anyway, and they don't require a lot of graphics.

And I've tried both Call of Duty WWII and Control at 4K. I don't know the FPS, but it looked fluid to me.

That's a nice observation.

Yes, I've personally bought 5 AMD CPUs, and just one Intel CPU.

But all of the three GPUs I've bought so far were Nvidia. Now that I mostly use Daz, I don't think I could use their cards.

At this moment, there are no reliable RTX 2070 Super models sold by Amazon.it under 800€. But I can wait until the start of January.

Outside of Amazon, there's a store that sells it for 518,91. But since it's the only one, I'm afraid they don't pay the VAT. There were some cases in Italy some years ago, and when they closed the stores, the last customers paid without receiving anything.

For the 2060 Super, it's 446,52 now.

That same store sells it for 392,71, but no one else manages to offer a price lower than Amazon's.

The problem with the mainstream benchtests, is that they cater primarily to gamers, and the few that do have a (usually minimal) test for rendering, do not test on iray, since that's an nvidia exclusive, which means they can't compare it with Radeon cards. Gaming and iray rendering are two *totally* different things. The only thing they have in common, is that they use videocards. So, for those mainly interested in iray rendering, the benchmark thread on these forums is the only useful comparison we can make.

Another interesting tidbit you won't hear anywhere else: for iray, it basically doesn't matter whether you have a relatively cheap nvidia card, or a top of the line card of the same model with all the bells and whistles. An MSI RTX 2070 Super Ventus renders almost exactly the same as the $85 more expensive Asus Rog Strix RTX 2070 Super Advanced. If $140 is a consideration in whether a 2070S would be worth it over a 2060S, then rest assured that for iray rendering, the $85 difference in price is no consideration at all: it's just not worth it. The difference doesn't amount to tens of percent; it won't even amount to a single percent. Maybe your local climate would be a reason to get a card with better cooling, but for overall performance, it just ain't worth it.

I picked up a RTX 2070 Super about a week ago and very happy that I did. The improvement (vs. dual 970s) has been incredible.

If I had to say anything bad, it would be... The 2070 Super is a beast in terms of size and weight. It took quite a bit of maneuvering and finagling to get the thing in and secured.

The size of it made routing the power leads a bit challenging. Lining up the rear was easy, but getting it secured in place was tricky (i.e. lining up the screw holes and getting the screws in place). This last bit was absolutely essential because, with its weight and the anticipated vibration, I wanted to make sure the stress on my motherboard was eased.

I agree about the benchmarks :)

I'm not looking for a RGB strix version or something like that, but I would avoid some PNY/Manli/Zotac versions.

That's because I care about noise, and all my system is very silent. This PSU I just bought doesn't even start the fan for daily use wattage.

The climate in Italy can be pretty hot.

If I get it, I'll make sure to screw everything into place, thanks :D

I agree. That's why I mentioned the number of SM/RT cores, which is what's going to be very important in iRay & ray tracing. However, I don't know how much each added SM/RT core adds to the speed of an iRay render, as there are really no standardized and widely tested benchmark tests for that.

So while the video card tests at passmark.com are OK as older benchmarks, they say nothing about the 30, 34, 36, or 40 SM/RT cores available just in the 2060 / 2070 line of nVidia cards. Is a 40 SM/RT core card substantially faster than a 36 SM/RT core card in ray tracing, say 20%, or is it just straight math, with the 36 SM core card being 90% as fast as the 40 SM core card? That difference matters for 4K renders that run for minutes or hours. But for a 4K render that takes 9/10 of a second instead of a full second, the OS doesn't even guarantee that the UI will tell you the render is done within a second of it completing, so that's not a big deal.

One last thing, if you get the RTX 2070 Super, make sure you double check it for protective covers and plastic film. Sounds like a silly statement but...

The gray / black plastic interface covers are almost the same color as the board itself. And there were a lot of them. The obvious ones were easy to spot and remove, but there were three to four more, some of which were small and not obvious.

The protective plastic film was every where too. The smaller sections weren't obvious and were not connected to the bigger parts. So I had to go hunting for all of those as well.

It seems a bit more complex than that. There's the RT Cores and there's our "old fashioned" Cuda Cores.

Cuda Cores are pretty straightforward: more of these equals more calculations at once, equals faster rendertimes.

RT Cores seem to be somehow involved with how the Cuda Cores calculate stuff, more of these equals the Cuda Cores performing more efficiently, equals faster rendertimes.

But how these two interact exactly isn't clear. It's just fairly clear that the two noticeably increase performance when combined.

The benefit of the RT cores might also depend on what's in the scene being rendered, some scenes might benefit more from the addition of RT cores than others.

So, more Cuda Cores is always better. More RT cores is also always better, but mileage varies with these. I do find it interesting that as few as 40 SM/RT cores have such a noticeable impact on render speeds, compared to the thousands of Cuda Cores that go with them. So it seems logical that there'd be a noticeable difference between 34 SM/RT cores and 40 SM/RT cores. Whether there's an upper limit to how many of these cores would be beneficial is something only nvidia knows. Maybe their next generation just doubles the number of these cores for a 20% increase in performance. Maybe doubling the number is overkill and would have no benefit at all.

Migenius has been working with Iray for a while and they have both a benchmark and a breakdown of how RTX performance works.

https://www.migenius.com/articles/rtx-performance-explained

Like Drip said, CUDA is pretty straightforward, the performance gains with pure CUDA across any two GPUs were consistent across all types of scenes. If GPU A is 2X faster than GPU B in the bench, then you can be pretty sure that GPU A will always be 2X faster than GPU B. However ray tracing cores are different, and the performance scales differently depending on how much actual ray tracing is needed in a given scene. So like in our bench, the 2070 Super is 40% faster than the 2060 Super. But it may not always be 40% depending on how you build your scenes. In general, the more geometrically complex a scene gets, the more the RT cores come into play. So the more complex a scene gets (compared to the benchmark scene), then the 2070 Super may be more than 40% faster. A scene that is simpler than the benchmark scene might be a little less than 40%.
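One way to picture why RT core gains vary by scene is an Amdahl's-law style estimate: only the share of render time spent on ray tracing gets accelerated, so the overall gain depends on how large that share is. The Python sketch below is a toy model under that assumption, not how Iray actually schedules work; the fractions and the 5x acceleration factor are made-up illustration values, not measurements.

```python
def overall_speedup(rt_fraction, rt_acceleration):
    """Amdahl-style estimate of the whole-render speedup.

    rt_fraction: share of the original render time spent on ray tracing (0..1).
    rt_acceleration: how many times faster that share becomes with RT cores.
    Both inputs are hypothetical; nothing here is measured from Iray.
    """
    if not 0.0 <= rt_fraction <= 1.0:
        raise ValueError("rt_fraction must be between 0 and 1")
    if rt_acceleration <= 0:
        raise ValueError("rt_acceleration must be positive")
    # The non-ray-tracing share is unchanged; the ray-tracing share shrinks.
    return 1.0 / ((1.0 - rt_fraction) + rt_fraction / rt_acceleration)


# Made-up scenes: a simple one, a medium one, and a geometry-heavy one.
for fraction in (0.2, 0.5, 0.8):
    gain = overall_speedup(fraction, rt_acceleration=5.0)
    print(f"ray tracing share {fraction:.0%}: overall speedup {gain:.2f}x")
```

In this toy model the same hardware gives a bigger overall gain as the ray tracing share grows, which matches the general point that geometrically complex scenes benefit more.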

https://www.migenius.com/products/nvidia-iray/iray-rtx-2019-1-1-benchmarks

However they have not benched many RTX cards. So the only place for a RTX Iray benchmark is...here. Our forum is the only place where you can find all of the RTX cards benched with Iray RTX. I keep that thread in my signature.

There is another scene that might push RTX more; that thread is here: https://www.daz3d.com/forums/discussion/344451/rtx-benchmark-thread-show-me-the-power/p1 This is the thread LenioTG probably found. People stopped using it, but it is still a good little benchmark to run as it gives us another data point. With dforce strand hair becoming a thing, people who use that type of hair could be the ones to benefit most, going by how the benchmarks have been. I'd certainly like to see more people use that bench as well, because it really does seem to show us the power of RTX like its name suggests. Unfortunately nobody has compiled the benches into a chart.

Cool, thanks for that. I need to get up to speed on how they scale.

Tensor cores are pretty straightforward, they do matrix math very very fast. Turns out matrix math is a big part of AI and therefore tensor cores greatly speed up AI training.

The RT cores are optimized for doing vector math. Both ray tracing and surround sound use a lot of vector math. AIUI the limiting factor is that using RT cores for real time ray tracing already has a significant performance impact so adding surround sound processing to the RT cores isn't really worth it yet.